import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import plotly.express as px
from plotly.subplots import make_subplots
import plotly.io as pio
pio.renderers.default = 'colab'
from itables import show
import warnings

# This stops a few warning messages from showing
pd.options.mode.chained_assignment = None
warnings.simplefilter(action='ignore', category=FutureWarning)

Introduction to Data Science

Data Ethics - Algorithmic Bias

Important Information

- Email: joanna_bieri@redlands.edu

- Office Hours: Duke 209 (see Joanna’s schedule)

Day 14 Assignment: same drill, kind of (no coding)

- Make sure you pull any new content from the class repo, then copy it over into your working directory.

- Open the file Day##-HW.ipynb and start doing the problems.

- You can do these problems as you follow along with the lecture notes and video.

- Get as far as you can before class.

- Submit what you have so far: commit and push to Git.

- Take the daily check in quiz on Canvas.

- Come to class with lots of questions!

If you start having trouble with git!!!

Some people have reported that Git disappears or gives errors when they try to use it in JupyterLab. Here is another option for interacting with Git:

If you start having errors, try downloading this app. I can show you how to use it in class.

Data Science Ethics - Algorithmic Bias

This lecture closely follows Data Science in a Box Unit 3, Deck 3. It has been updated to fit the prerequisites and interests of our class and translated to Python.

- What do we mean by algorithmic bias?

- Where are these algorithms being used?

- What are the human and societal effects?

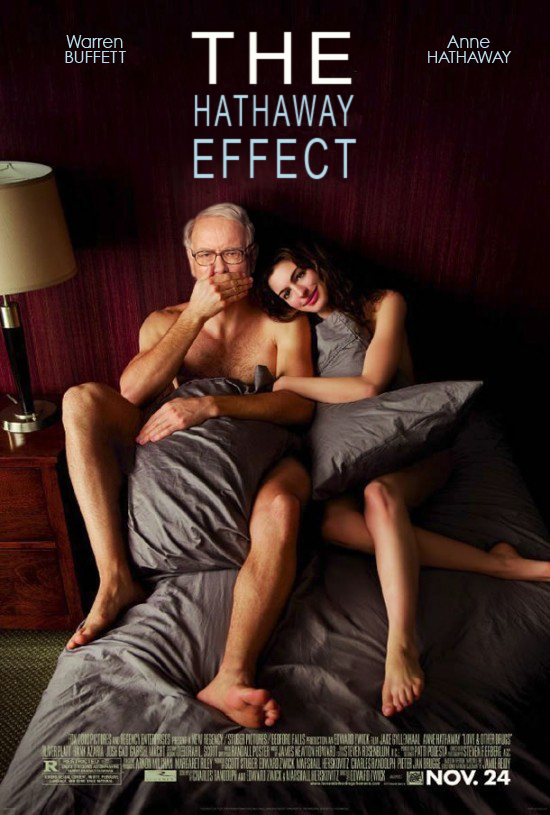

The Hathaway Effect

We will start with a lighthearted example to just explain what we mean by algorithmic bias.

The company Berkshire Hathaway, led for decades by Warren Buffett, has nothing to do with the actress Anne Hathaway. This analysis looked at certain points in Anne Hathaway’s career and compared them to Berkshire Hathaway’s stock price (ticker BRK.A):

- Oct. 3, 2008: Rachel Getting Married opens, BRK.A up 0.44%

- Jan. 5, 2009: Bride Wars opens, BRK.A up 2.61%

- Feb. 8, 2010: Valentine’s Day opens, BRK.A up 1.01%

- March 5, 2010: Alice in Wonderland opens, BRK.A up 0.74%

- Nov. 24, 2010: Love and Other Drugs opens, BRK.A up 1.62%

- Nov. 29, 2010: Anne announced as co-host of the Oscars, BRK.A up 0.25%

Dan Mirvish. The Hathaway Effect: How Anne Gives Warren Buffett a Rise. The Huffington Post. 2 Mar 2011.

This is an observational study. One possible explanation: people search for Anne Hathaway and get results for Berkshire Hathaway. If stock-trading bots pick up on this, could they end up buying more Berkshire stock when Anne releases a movie? Read the article and judge for yourself. Do you think there is a real effect here?

How does this illustrate Algorithmic Bias?

- The algorithms (a search engine and trading bots) were trained just to return the best results for their purpose.

- The algorithms do not know anything about the difference between Anne and Berkshire.

- This could have unintended consequences. Anne’s success leads to success for Berkshire (maybe?).

- BUT imagine how this could go really wrong.
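The article only speculates about this mechanism. Still, a toy sketch shows how a bot that matches words rather than meanings could mistake Anne for Berkshire. Everything here is hypothetical: the `KEYWORDS` table, the `buzz_scores` helper and the headlines are all made up. Real trading systems are far more sophisticated than this.

```python
# Hypothetical, deliberately naive "news bot": it scores how much buzz a
# ticker is getting by counting headlines that contain any of its keywords.
KEYWORDS = {"BRK.A": ["hathaway", "berkshire", "buffett"]}


def buzz_scores(headlines):
    """Count, per ticker, the headlines containing at least one keyword."""
    scores = {ticker: 0 for ticker in KEYWORDS}
    for headline in headlines:
        words = headline.lower().split()
        for ticker, keys in KEYWORDS.items():
            if any(key in words for key in keys):
                scores[ticker] += 1
    return scores


# Made-up headlines: only the last one is actually about the company.
headlines = [
    "Anne Hathaway shines in new romantic comedy",
    "Critics praise Hathaway performance",
    "Buffett comments on interest rates",
]
print(buzz_scores(headlines))  # {'BRK.A': 3}
```

Two of the three headlines are about the actress, yet all three raise the BRK.A score. The bot has no idea which Hathaway the text means.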

——————

Algorithmic bias and gender

Google Translate

Google Translate takes sentences in one language and translates them into another. On the left are sentence fragments in Turkish and on the right is the English translation.

Turkish uses a single, gender-neutral third-person pronoun (“o”), so the translator has to choose a gender when translating into English. How did it do? Do you notice anything biased?

Even if the training procedure itself is unbiased, a machine trained on biased data will parrot that bias back to us!
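Here is a caricature of how this can happen. The corpus and the `pick_pronoun` helper are hypothetical, and real translation systems use large neural models rather than simple counts. The sketch fills in the gender-neutral Turkish “o” with whichever English pronoun most often appeared with the profession in its training sentences. No line of the code mentions gender stereotypes; the bias comes entirely from the data.

```python
from collections import Counter

# Hypothetical English training sentences (made up for illustration).
corpus = [
    "he is a doctor", "he is a doctor", "she is a doctor",
    "she is a nurse", "she is a nurse", "she is a nurse", "he is a nurse",
]

# Turkish profession words and their English translations.
TURKISH_TO_ENGLISH = {"doktor": "doctor", "hemşire": "nurse"}


def pick_pronoun(profession, sentences):
    """Return the pronoun that most often starts a sentence ending in `profession`."""
    counts = Counter(
        s.split()[0] for s in sentences if s.split()[-1] == profession
    )
    return counts.most_common(1)[0][0]


# "o bir doktor" means "he/she is a doctor": the pronoun "o" has no gender.
for turkish, english in TURKISH_TO_ENGLISH.items():
    pronoun = pick_pronoun(english, corpus)
    print(f"o bir {turkish} -> {pronoun} is a {english}")
```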

Amazon’s experimental hiring algorithm

- Used AI to give job candidates scores ranging from one to five stars – much like shoppers rate products on Amazon

- Amazon’s system was not rating candidates for software developer jobs and other technical posts in a gender-neutral way.

- The system taught itself that male candidates were preferable.

- WHY? Because it was trained on past hiring decisions that were biased.

Gender bias was not the only issue. Problems with the data that underpinned the models’ judgments meant that unqualified candidates were often recommended for all manner of jobs, the people said.

Jeffrey Dastin. Amazon scraps secret AI recruiting tool that showed bias against women. Reuters. 10 Oct 2018.

- Algorithms can only pick up on features in the data. They do not consider the human behind the numbers. They are trained to minimize their “cost function”.
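Here is a minimal sketch of that idea with made-up data. The `history` table is hypothetical and far simpler than anything Amazon used. Every candidate is equally qualified, but past decisions hired men more often.

When the only features are categorical, the rule that minimizes the training cost under 0-1 loss (the fraction of wrong predictions) is simple. It predicts the majority past decision for each combination of feature values, so it learns to reject women.

```python
import pandas as pd

# Hypothetical past hiring records: everyone is equally qualified,
# but the (biased) historical decisions hired 8/10 men and 3/10 women.
history = pd.DataFrame({
    "gender": ["M"] * 10 + ["F"] * 10,
    "qualified": [1] * 20,
    "hired": [1] * 8 + [0] * 2 + [1] * 3 + [0] * 7,
})

features = ["gender", "qualified"]

# Majority vote within each feature combination: the rule with the lowest
# training error (0-1 loss) among rules that only see these features.
rule = history.groupby(features)["hired"].mean().gt(0.5).astype(int)
print(rule)

# Training predictions and the cost (error rate) the rule achieves.
pred = history.groupby(features)["hired"].transform("mean").gt(0.5).astype(int)
error = (pred != history["hired"]).mean()
print(f"training error: {error:.2f}")
```

The rule predicts “hire” for every man and “reject” for every woman. A new, equally qualified woman would be rejected purely because of the pattern in past decisions.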

——————

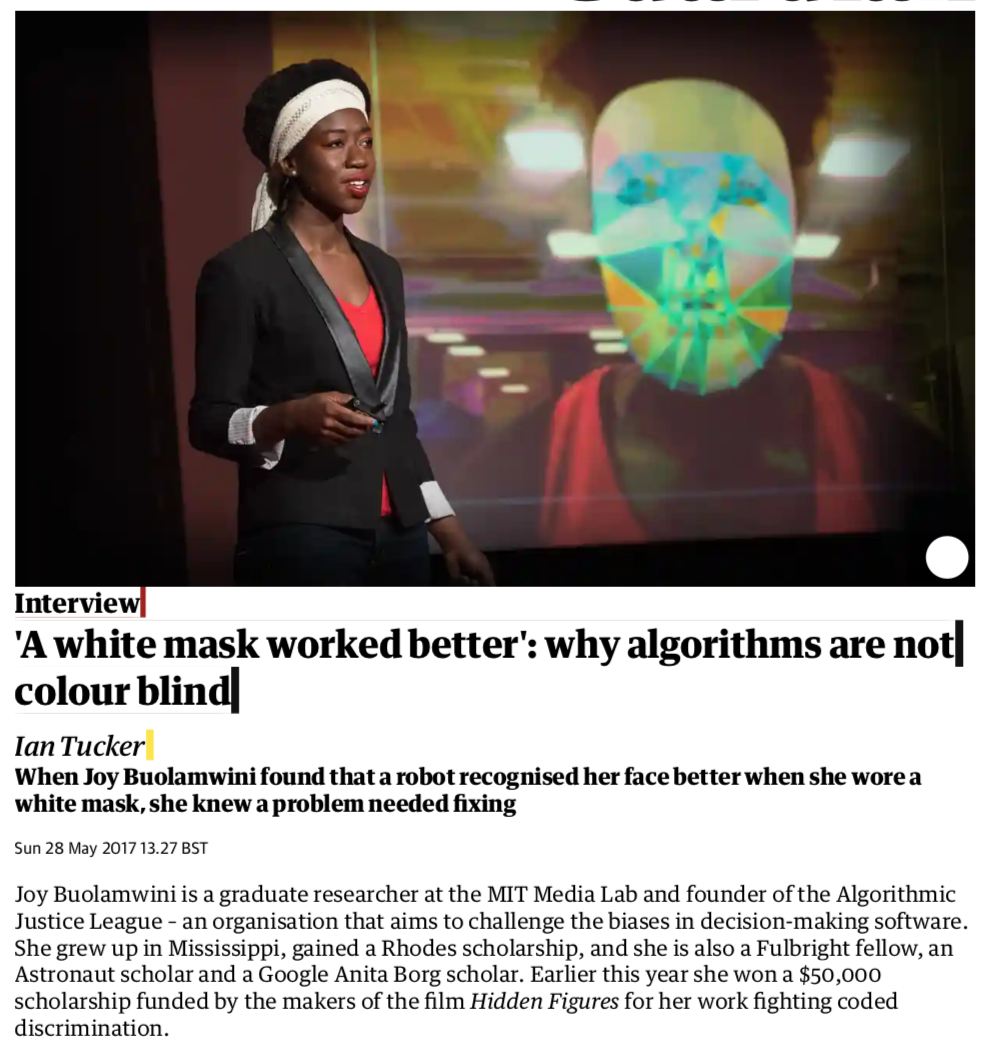

Algorithmic bias and race

Facial recognition

Ian Tucker. ‘A white mask worked better’: why algorithms are not colour blind. The Guardian. 28 May 2017.

- Joy Buolamwini is a graduate researcher at the MIT Media Lab and founder of the Algorithmic Justice League.

- She noted that because the facial recognition algorithms were trained mostly on white faces, they did a poor job of recognizing non-white faces.

- When she put on a white mask with no human features the algorithm was better at tracking her face than when she wasn’t wearing a mask.
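Here is a toy simulation of why under-representation matters. This is not how facial recognition works: a single number stands in for an image. The code also assumes (see the `shift` parameter) that group B’s features sit one unit higher than group A’s.

The training data is 95% group A, and the model picks the cut-off with the lowest error on that data. That cut-off ends up tuned to group A, so accuracy drops for group B even though both test sets are the same size.

```python
import numpy as np

rng = np.random.default_rng(0)


def sample(n, shift):
    """Draw n points per class; class 1 sits 2 units above class 0.
    ASSUMPTION: `shift` moves a whole group, standing in for a group whose
    features look different to the model."""
    x = np.concatenate([rng.normal(0 + shift, 1, n), rng.normal(2 + shift, 1, n)])
    y = np.concatenate([np.zeros(n, dtype=int), np.ones(n, dtype=int)])
    return x, y


def accuracy(x, y, t):
    """Accuracy of the rule 'predict class 1 when x > t'."""
    return np.mean((x > t).astype(int) == y)


# Training data: 9,500 points from group A, only 500 from group B.
xa, ya = sample(4750, shift=0.0)
xb, yb = sample(250, shift=1.0)
x_train = np.concatenate([xa, xb])
y_train = np.concatenate([ya, yb])

# "Training": pick the threshold with the lowest error on the pooled data.
grid = np.linspace(-2, 5, 701)
errors = [1 - accuracy(x_train, y_train, t) for t in grid]
t_best = grid[int(np.argmin(errors))]

# Evaluate on fresh, equally sized test sets for each group.
acc_a = accuracy(*sample(10000, shift=0.0), t_best)
acc_b = accuracy(*sample(10000, shift=1.0), t_best)
print(f"threshold={t_best:.2f}  accuracy A={acc_a:.3f}  accuracy B={acc_b:.3f}")
```

The learned threshold should land close to group A’s best cut-off (about 1), while group B’s best cut-off is about 2. Group B pays for being under-represented.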

Video - How I’m fighting bias in algorithms | Joy Buolamwini

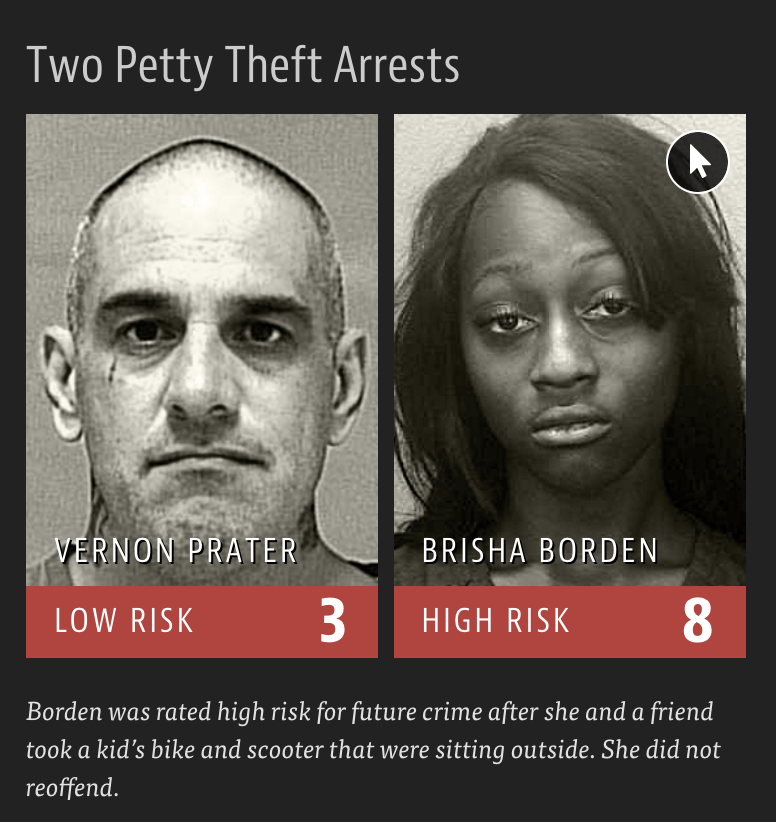

Criminal Sentencing

Software is being used across the country to predict future criminals. And it’s biased against blacks.

Julia Angwin, Jeff Larson, Surya Mattu, and Lauren Kirchner. Machine Bias. 23 May 2016. ProPublica.

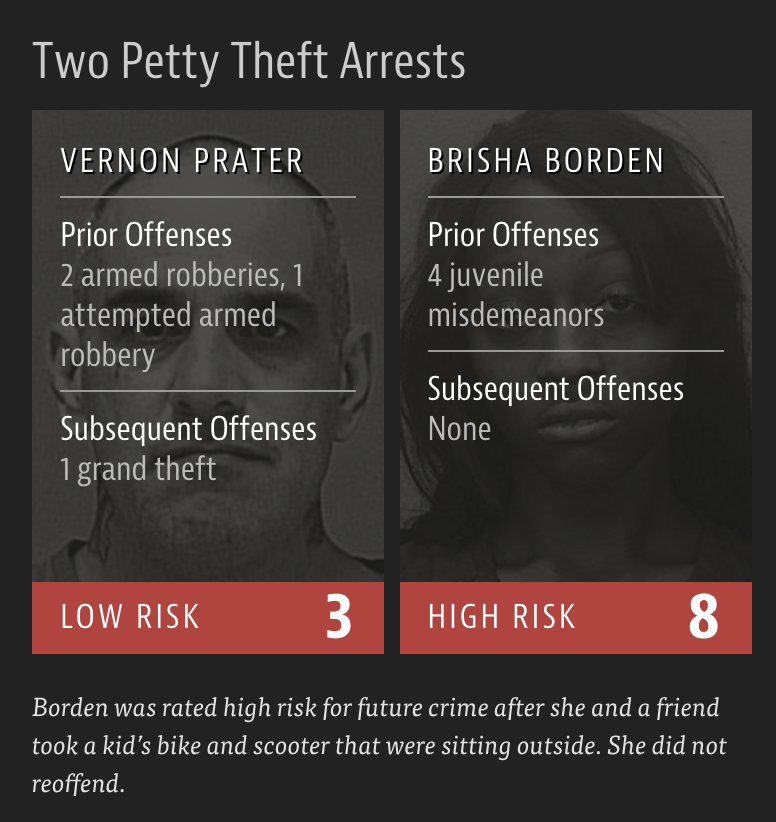

A tale of two convicts

- These two images depict individuals who were each arrested for petty theft.

- The risk score rates how likely each person is to commit a crime again.

“Although these measures were crafted with the best of intentions, I am concerned that they inadvertently undermine our efforts to ensure individualized and equal justice,” he said, adding, “they may exacerbate unwarranted and unjust disparities that are already far too common in our criminal justice system and in our society.” - Then U.S. Attorney General Eric Holder (2014)

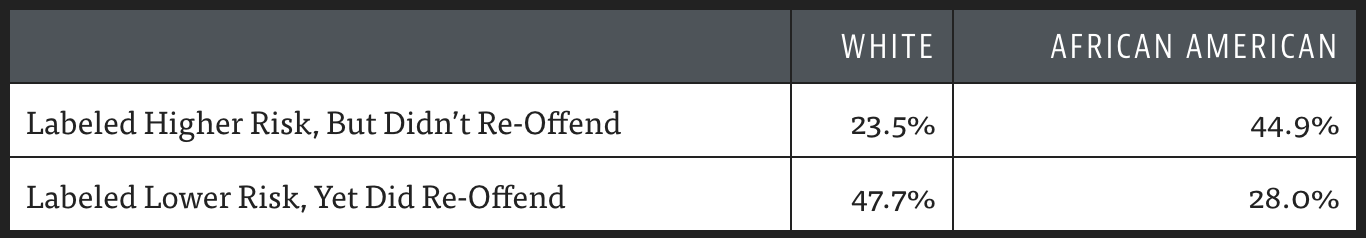

ProPublica analysis - Data:

Risk scores assigned to more than 7,000 people arrested in Broward County, Florida, in 2013 and 2014 + whether they were charged with new crimes over the next two years

ProPublica analysis - Results:

- 20% of those predicted to commit violent crimes actually did

- The algorithm did better when the full range of crimes (e.g. misdemeanors) was taken into account: 61% of those it deemed likely to re-offend were arrested for a new crime within two years

- The algorithm was more likely to falsely flag black defendants as future criminals, at almost twice the rate of white defendants

- White defendants were mislabeled as low risk more often than black defendants

- This affects people’s lives!!!

- Actual decisions are being made about the liberty and freedom of these people.

What are our societal beliefs about these algorithms?
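Two of the findings above are statements about different error rates. The false positive rate counts people labeled high risk who did not re-offend. The false negative rate counts people labeled low risk who did re-offend.

The sketch below computes both, per group, with pandas on a tiny made-up table. These are NOT ProPublica’s numbers, and the column names are our own. Notice that both groups get the same overall accuracy, yet the kinds of mistakes differ.

```python
import pandas as pd

# Hypothetical toy records (not real data): the label the algorithm gave
# (1 = high risk) and whether the person actually re-offended (1 = yes).
df = pd.DataFrame({
    "group": ["A"] * 10 + ["B"] * 10,
    "reoffended": [1, 1, 1, 1, 0, 0, 0, 0, 0, 0] * 2,
    "high_risk": [1, 1, 0, 0, 1, 0, 0, 0, 0, 0,    # group A
                  1, 1, 1, 0, 1, 1, 0, 0, 0, 0],   # group B
})


def error_rates(g):
    """False positive rate, false negative rate and accuracy for one group."""
    did_not = g[g["reoffended"] == 0]
    did = g[g["reoffended"] == 1]
    return pd.Series({
        "false_positive_rate": did_not["high_risk"].mean(),
        "false_negative_rate": 1 - did["high_risk"].mean(),
        "accuracy": (g["high_risk"] == g["reoffended"]).mean(),
    })


rates = df.groupby("group")[["high_risk", "reoffended"]].apply(error_rates)
print(rates.round(3))
```

In this toy table, group B is falsely flagged twice as often as group A (0.333 vs 0.167). Group A is mislabeled low risk twice as often as group B (0.5 vs 0.25). A single accuracy number (0.7 for both) hides all of this.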

- Maybe people believe that if you take humans out of the decision making, the decisions will be MORE fair.

- Computers are not biased.

- It is better/faster to let the algorithm decide.

What really happens behind the algorithm?

- Algorithms must be trained to make decisions, so we use data from our own society.

- Our society has a history of bias in multiple ways:

- Who is represented in the data is highly dependent on existing bias and access in society.

- Who collected or owns the data could make a big difference.

- Algorithms encode this bias and so biased decisions can come out.

Q What is your response to our discussion of bias in algorithms? Talk about the pluses and minuses of using algorithms to make decisions in our human world.

How to write a racist AI without trying - blog post

How to write a racist AI without really trying. Robyn Speer - July 13, 2017

——————

Further reading

There are so many interesting and deeply troubling examples of algorithmic bias in our world. Your job as a data scientist is to be aware of when this is happening and, ideally, to help stop it.

Machine Bias

Machine Bias by Julia Angwin, Jeff Larson, Surya Mattu, and Lauren Kirchner

- A bit more math background.

Ethics and Data Science

Ethics and Data Science by Mike Loukides, Hilary Mason, DJ Patil (free Kindle download)

- Nice overview of the topic.

Weapons of Math Destruction

Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy, by Cathy O’Neil

- Really well written!

Algorithms of Oppression

Algorithms of Oppression: How Search Engines Reinforce Racism by Safiya Umoja Noble

- Really interesting and eye-opening.

——————

Data Ethics in your Work

- At some point during your data science learning journey you will learn tools that can be used unethically

- You might also be tempted to use your knowledge in a way that is ethically questionable either because of business goals or for the pursuit of further knowledge (or because your boss told you to do so)

Q How do you train yourself to make the right decisions (or reduce the likelihood of accidentally making the wrong decisions) at those points?

Q How do you respond when you see bias in someone’s work? How could you take action to educate others?

Do good with data

- Data Science for Social Good:

- DataKind: DataKind brings high-impact organizations together with leading data scientists to use data science in the service of humanity.

——————

Further watching

Weapons of Math Destruction | Cathy O’Neil | Talks at Google

Imagining a Future Free from the Algorithms of Oppression | Safiya Noble | ACL 2019

What’s an Algorithm Got to Do with It

——————

Report on your Data Ethics reading:

Your homework for today is all essay and written work. Make sure you respond to the three questions in the lecture:

Q1 What is your response to our discussion of bias in algorithms? Talk about the pluses and minuses of using algorithms to make decisions in our human world.

Q2 How do you train yourself to make the right decisions (or reduce the likelihood of accidentally making the wrong decisions) at those points?

Q3 How do you respond when you see bias in someone’s work? How could you take action to educate others?

Report

Write a report about what you learned from your ethics reading exploration. For each book/article you read:

- Include a full proper reference to the book/article.

- BOOK: Author last name, First name. Book Title: Subtitle. Edition, Publisher, Year.

- ONLINE ARTICLE: Author last name, First name. Article Title. Website name, date accessed. URL.

- MLA styles for citing other types of online work

- Write a summary, in your own words, of what the book/article was about. Imagine telling your classmates what they would learn by reading it.

- Discuss your own reaction to the book/article. Did it have any effect on how you think about data and ethics? Do you agree with the author? What specific ideas really stood out to you?